Hello MRtrix community,

I am attempting to use the dStripe module for MRtrix developed by @maxpietsch

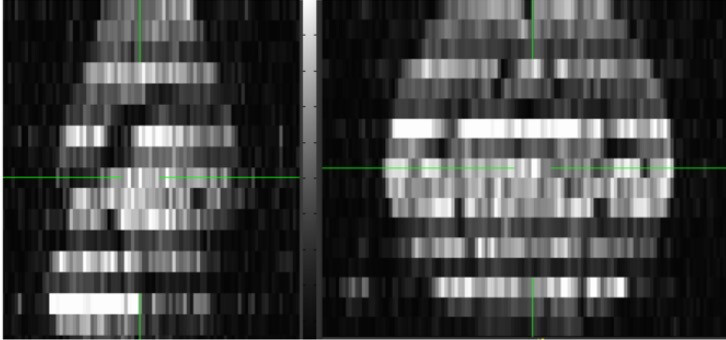

( GitHub - maxpietsch/dStripe: This repository contains code and model weights for the method described in the paper "dStripe: slice artefact correction in diffusion MRI via constrained neural network" https://doi.org/10.1016/j.media.2021.102255 ), to deal with issues in mouse diffusion data. Example of the phenomena in data:

I see that @jdtournier also created a fork of this repository a couple of days ago ( GitHub - jdtournier/dStripe: This repository contains code and model weights for the method described in the paper "dStripe: slice artefact correction in diffusion MRI via constrained neural network" https://doi.org/10.1016/j.media.2021.102255 ), and I am building my Docker image from this updated repository.

Specifically, I am running into issues in the eval_stripes3.py portion of this module.

Here is the command issued:

sudo docker run --rm --volume /mouse/dGAG_P2W/Output_Results/Diffusion/Mondo_Output/T24_RE_P2W/Diffusion/Preproc/:/data dstripe \
dwidestripe \
data/T24_RE_P2W_raw_b.nii.gz \
data/automask.nii.gz \
data/raw_b_dstripe_field.mif \
-fslgrad data/large_b.bvec data/large_b.bval \
-info \
-nthreads 16 \
-device cpu

Here is the error text:

/dwidestripe-tmp-57BVJ0/eval_dstripe_2019_07_03-31_v2/dstripe_2019_07_03-31_v2.pth.tar.json_val

Traceback (most recent call last):
  File "/opt/dStripe/dstripe/eval_stripes3.py", line 599, in <module>
    S = mirrorpadz(ten2prec(sample['source']), nmirr=nmirr, mode='reflect').to(device)
  File "/opt/dStripe/dstripe/eval_stripes3.py", line 116, in mirrorpadz
    return F.pad(im_o.view(1, shape[0] * shape[1], -1, shape[-1]), p, mode, value=value).view(*shape_padded)
  File "/opt/env/lib/python3.7/site-packages/torch/nn/functional.py", line 2171, in pad
    return torch._C._nn.reflection_pad2d(input, pad)
RuntimeError: invalid argument 4: Padding size should be less than the corresponding input dimension, but got: padding (17, 17) at dimension 3 of input [1 x 1 x 12288 x 17] at /opt/conda/conda-bld/pytorch_1533739672741/work/aten/src/THNN/generic/SpatialReflectionPadding.c:89

dwidestripe: /opt/env/bin/python3 /opt/dStripe/dstripe/eval_stripes3.py /opt/dStripe/models/dstripe_2019_07_03-31_v2.pth.tar.json nn/amp.mif nn/mask.mif --butterworth_samples_cutoff=0.65625 --outdir=/dwidestripe-tmp-57BVJ0/ --verbose=0 --batch_size=1 --write_field=true --write_corrected=false --slice_native=false --attention --nthreads=16 --device=cpu
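As far as I can tell from the message, reflection padding requires the padding to be strictly smaller than the dimension being padded, and here a padding of 17 is requested on a dimension of size 17. Below is a minimal pure-Python sketch of that constraint as I understand it. It is not the PyTorch implementation, and `reflect_pad_1d` is a hypothetical helper I wrote only for illustration:

```python
def reflect_pad_1d(values, pad):
    """Reflect-pad a list on both sides without repeating the edge sample.

    Hypothetical helper for illustration only; it mimics the size check
    reported in the error message, not PyTorch's actual code.
    """
    n = len(values)
    if pad >= n:
        # Only n - 1 samples are available to mirror on each side.
        raise ValueError(
            "Padding size should be less than the corresponding input "
            f"dimension, but got: padding ({pad}, {pad}) for length {n}"
        )
    left = values[1:pad + 1][::-1]           # mirror of the samples after the first
    right = values[n - pad - 1:n - 1][::-1]  # mirror of the samples before the last
    return left + values + right


slices = list(range(17))                     # stand-in for my 17 slices
padded = reflect_pad_1d(slices, 16)          # largest padding that is allowed
try:
    reflect_pad_1d(slices, 17)               # same padding as in my error
    failed = False
except ValueError:
    failed = True
```

If this reading is right, a padding of 16 would be accepted for 17 slices, while the padding of 17 requested in my run cannot be.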

Running mrinfo on my data shows the dimensions are [128 x 96 x 17 x 47]. This is accurate, and I can confirm that my data contain 47 diffusion-weighted volumes. The 17 is the number of coronal slices through the mouse brain, which are indeed thick.

I am under the impression that my image is being flattened across two of its dimensions, as the tensor being passed to PyTorch is [1 x 1 x 12288 x 17], whereas I believe it should be [1 x 1 x 128 x 96 x 17]. My understanding is that the first two dimensions of the 5-dimensional tensor correspond to batch size and channels (e.g. color channels), which are both 1 in this case, so those should be OK.
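To check where the 12288 comes from, I sketched the shape arithmetic of the `view(1, shape[0] * shape[1], -1, shape[-1])` call shown in the traceback. This assumes the tensor entering `mirrorpadz` has shape [1, 1, 128, 96, 17], which is my guess and not something I have verified; `flattened_view_shape` is a hypothetical helper, not part of dStripe:

```python
from math import prod


def flattened_view_shape(shape):
    """Shape produced by view(1, shape[0] * shape[1], -1, shape[-1]).

    Hypothetical helper: it only reproduces the shape arithmetic, so that
    no tensor library is needed.
    """
    channels = shape[0] * shape[1]
    last = shape[-1]
    # The -1 entry absorbs whatever is left of the total element count.
    middle = prod(shape) // (channels * last)
    return (1, channels, middle, last)


assumed_shape = (1, 1, 128, 96, 17)  # assumption: batch, channel, x, y, slices
view_shape = flattened_view_shape(assumed_shape)
print(view_shape)
```

Under that assumption the result is (1, 1, 12288, 17), and 12288 is 128 x 96, so the two in-plane dimensions appear to be merged by this reshape before the padding is applied along the last dimension of size 17.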

Any insights into this problem would be much appreciated!

I am happy to provide additional information as needed.

Cheers,

-Brad