Hello,

I am running a whole-brain FBA on a large cohort (n ≈ 700), looking for correlations between logFC and a cognitive score while controlling for sex and handedness (age is already accounted for in the cognitive score). My template was built from 40 subjects chosen to span the wide range of ages among participants.

I get two warnings. The first is:

`[WARNING] A total of 31890 fixels do not possess any streamlines-based connectivity; these will not be enhanced by CFE, and hence cannot be tested for statistical significance.`

I've seen this discussed elsewhere, but the number seems especially high given that there are 517176 fixels in the template space. Should I be particularly concerned about this, and if so, what would you suggest for QC?
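For scale, the two counts quoted above can be turned into a proportion. A trivial sketch of the arithmetic, using only the numbers from the warning and the template fixel count:

```python
# Figures quoted from the fixelcfestats warning and the template fixel count.
unconnected_fixels = 31890
total_fixels = 517176

# Share of template fixels that CFE cannot enhance (and hence cannot be tested).
fraction = unconnected_fixels / total_fixels
print(f"{fraction:.2%} of template fixels have no streamline-based connectivity")
```

This prints roughly 6%, i.e. about one template fixel in sixteen is excluded from testing.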

The second is:

`Design matrix conditioning is poor (condition number: 699.547); model fitting may be highly influenced by noise.`

My design matrix is a text file with one line per subject, each of the form:

`0 METRIC COVAR1 COVAR2`

with that subject's actual values filled in.

The contrast matrix is a one-line text file containing `0 1 0 0`, to test for an effect of the metric while holding the two covariates fixed.
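In a GLM, the contrast `0 1 0 0` selects the coefficient of the second design column (METRIC), so the test is of the metric's effect with the other columns in the model. A minimal numpy sketch of that per-fixel test on simulated data, assuming the first design column is an intercept of ones and the two covariates are coded 0/1 (all data and names here are hypothetical). It only illustrates the GLM contrast; fixelcfestats additionally applies CFE and permutation-based inference, which are not reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical cohort: n subjects, values for one fixel (e.g. logFC).
n = 700
intercept = np.ones(n)                             # assumption: intercept column of ones
metric = rng.normal(100.0, 15.0, n)                # hypothetical cognitive score
sex = rng.integers(0, 2, n).astype(float)          # hypothetical 0/1 coding
handedness = rng.integers(0, 2, n).astype(float)   # hypothetical 0/1 coding

# One row per subject, columns in the order intercept, METRIC, COVAR1, COVAR2.
X = np.column_stack([intercept, metric, sex, handedness])
c = np.array([0.0, 1.0, 0.0, 0.0])  # the contrast "0 1 0 0"

# Simulated fixel values with a small true effect of the metric plus noise.
y = 0.002 * metric + 0.05 * sex + rng.normal(0.0, 0.1, n)

# Ordinary least squares fit of the GLM y = X beta + e.
beta, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta
sigma2 = residuals @ residuals / (n - rank)

# t-statistic for c^T beta: the metric's effect with the covariates in the model.
t = (c @ beta) / np.sqrt(sigma2 * (c @ np.linalg.inv(X.T @ X) @ c))
print(f"effect of metric = {c @ beta:.4f}, t = {t:.2f}")
```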

As with the first warning, is this something I should be concerned about?
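Part of a large condition number can come simply from columns on very different scales, such as an intercept of ones next to a score in the hundreds. The sketch below, on hypothetical data, compares the condition number (ratio of largest to smallest singular value, as computed by `numpy.linalg.cond`) of a raw design with the same design after demeaning and scaling the non-intercept columns. Whether MRtrix computes its reported condition number in exactly this way is an assumption to check against the MRtrix documentation.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 700

# Hypothetical design: intercept, a score on a ~100 scale, and two 0/1 covariates.
score = rng.normal(100.0, 15.0, n)
sex = rng.integers(0, 2, n).astype(float)
hand = rng.integers(0, 2, n).astype(float)
X_raw = np.column_stack([np.ones(n), score, sex, hand])

# Same model with the non-intercept columns demeaned and scaled to unit variance.
X_std = X_raw.copy()
X_std[:, 1:] = (X_std[:, 1:] - X_std[:, 1:].mean(axis=0)) / X_std[:, 1:].std(axis=0)

# Condition number via SVD: largest / smallest singular value.
print(f"raw design:          {np.linalg.cond(X_raw):.1f}")
print(f"standardised design: {np.linalg.cond(X_std):.1f}")
```

With an intercept in the model, demeaning and rescaling covariates changes their coefficients but leaves the t-statistic for the metric unchanged in ordinary least squares. Note also that if the first design column were literally all zeros rather than ones, the matrix would be rank-deficient and its condition number infinite.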

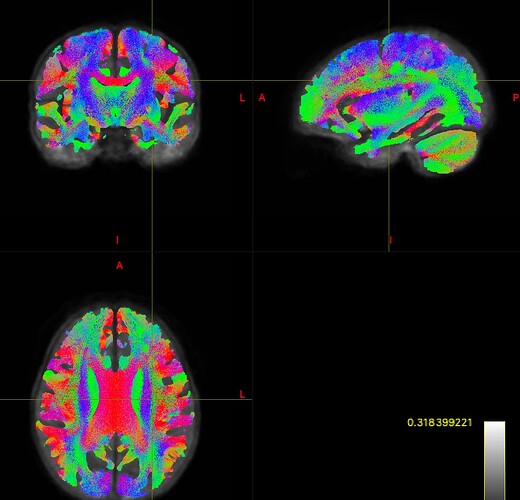

I did as much manual QC as was feasible given the cohort size. I ran TractSeg on the FOD template and got good-looking results. Before beginning the FBA, I removed subjects with poor brain masks (masks missing brain tissue, or no mask defined), and after warping to template space I removed subjects with poor registrations to the template. The 2-million-streamline SIFT-ed tractogram also looks complete (see pic below).

My last concern is that I run into out-of-memory errors even with 256 GB of memory allocated to the process. Do you have any advice on managing memory for large cohorts?
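As a rough point of reference, a back-of-envelope estimate of the subject data alone, assuming (hypothetically) that it were held as one dense subjects × fixels matrix. This is not a statement of how fixelcfestats actually allocates memory, and it ignores other structures such as the fixel-to-fixel connectivity used by CFE.

```python
# Hypothetical back-of-envelope: size of a dense subjects x fixels matrix.
n_subjects = 700
n_fixels = 517176

# Bytes per value for single and double precision, converted to GiB.
sizes_gib = {
    name: n_subjects * n_fixels * nbytes / 2**30
    for name, nbytes in [("float32", 4), ("float64", 8)]
}
for name, gib in sizes_gib.items():
    print(f"{name}: {gib:.2f} GiB")
```

Under that assumption the raw data come to only a few GiB, so it is far from the whole of a 256 GB budget.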

Any guidance is appreciated, thank you.

Steven